Artificial Intelligence (AI) is one of the more promising technologies in medical imaging of the 21st century so far, with widespread potential in healthcare. New applications are emerging every day in diagnostic imaging as well as in many workflow and analytical areas, driven by the changing norms of value-based care and population health management.

The authors believe that, before AI grows exponentially, governance is critical to an efficacious and cost-effective implementation of the many AI-based applications. Healthcare providers can learn from other experiences in healthcare, such as cardiovascular information systems. Cardiovascular services tend to be diverse in nature with invasive, non-invasive, and event-recording applications. As systems emerged, no single vendor seemed to have an all-inclusive solution, and consequently different department sections acquired what was best for them. The net effect was a proliferation of systems that, in many cases, did not interoperate well and made it difficult for the cardiovascular specialist to assimilate comprehensive patient information.

Similar in nature has been the application of advanced visualization. There can be multiple departments with advanced visualization needs, such as diagnostic radiology, orthopedics, surgery, etc. Each of these areas may have pursued a particular vendor’s solution—again resulting in a proliferation of systems with overlapping functionality and poor interoperability.

In order to avoid similar experiences with AI, providing proper governance can potentially minimize organizational inconsistencies, inefficiencies, and expenses. We will review the current state of AI through examination of some real-world experiences, and then explore what is needed in AI to avoid the mistakes of the past.

Current State of AI

In order to understand the current state of AI and governance, we must understand how AI has evolved. First, let us differentiate between imaging and non-imaging AI. In the broadest sense, AI refers to machines that can learn, reason, and act for themselves. They can make their own decisions when faced with new situations, in the same way that humans and animals can [https://www.technologyreview.com/2018/11/10/139137/is-this-ai-we-drew-you-a-flowchart-to-work-it-out/]. AI algorithms use statistics to find patterns in massive amounts of data.

Imaging AI focuses on a branch of computer science dealing with the acquisition, reconstruction, analysis and/or interpretation of medical images by simulating human intelligent behavior in computers. Machine learning algorithms are a subset of artificial intelligence methods, characterized by the fact that you do not have to tell the computer how to solve the problem in advance. Instead, the computer learns to solve tasks by recognizing patterns in the data [https://www.quantib.com/the-ultimate-guide-to-ai-in-radiology#what-is-AI-and-how-does-it-work]. By analyzing thousands of similar images looking for specific patterns, the computer is able to predict if a certain pattern is representative of a particular diagnosis.

Non-imaging AI also employs algorithms, but instead of analyzing image content, they look for relevant patterns in other data. For example, if a patient has had multiple exams that include calculation of an ejection fraction, an algorithm that searches a vast amount of data for ejection fraction values and compares them would be helpful to the clinician. Similarly, an algorithm that uses machine learning to examine a number of patient parameters such as age, mobility, prior visits, etc. might be used to assess whether a patient is likely to be a no-show for an appointment, and recommend preemptive action.
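
The ejection fraction comparison described above can be sketched in a few lines of Python. This is only an illustration: the function names, the data layout (date and percentage pairs), and the 10-point drop threshold are assumptions made for the example, not clinical guidance or any vendor's implementation.

```python
from datetime import date

def ejection_fraction_changes(exams):
    """Return (exam_date, ef_percent, change_from_prior) in chronological order.

    `exams` is a list of (date, ef_percent) pairs; this layout is hypothetical.
    """
    ordered = sorted(exams, key=lambda exam: exam[0])
    changes = []
    prior = None
    for exam_date, ef in ordered:
        delta = None if prior is None else ef - prior
        changes.append((exam_date, ef, delta))
        prior = ef
    return changes

def flag_drops(changes, drop_threshold=10.0):
    """Dates where EF fell by at least `drop_threshold` points versus the prior exam.

    The default threshold is an illustrative placeholder only.
    """
    return [d for d, _, delta in changes
            if delta is not None and delta <= -drop_threshold]

# Exams may arrive out of order from different systems.
exams = [
    (date(2021, 3, 1), 55.0),
    (date(2020, 6, 15), 60.0),
    (date(2021, 11, 20), 42.0),
]
changes = ejection_fraction_changes(exams)
flagged = flag_drops(changes)
```

Here `flagged` holds only the most recent exam date, because that is the only exam where the value fell by more than the assumed threshold relative to the one before it.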

There are three main sources of evolving AI development: research developments; commercial developments; and AI platform development. Many research facilities are developing algorithms for specific factors, both imaging and non-imaging. Conversely, there are a number of commercial companies that are developing algorithms based on the products or services they sell.

Finally, there are companies that are concerned with the delivery of such AI algorithms, and they are focused on developing a platform or marketplace for delivery of their own or third-party algorithms. This is similar to smartphone applications, which are most likely delivered via an “app store” controlled by the phone’s operating system supplier such as Apple or Google. A user may choose to download any number of applications, and typically pays for only the ones they choose to use. A platform with multiple algorithms may be a better sell, but there are concerns about platform “exclusivity.”

One might say that development from all of these sources is in its infancy. In most cases, it appears that imaging AI is evolving separately from other areas. According to John Mongan, M.D., associate professor of clinical radiology, associate chair, translational informatics, and director, Center for Intelligent Imaging at the University of California at San Francisco (UCSF), the center is evolving a framework for imaging AI: “We consider the risks for deployment as well as the probability of success and the clinical impact.” Mongan believes AI governance “should include when and when not to use an AI algorithm. People using it must understand when it is and when it is not useful.”

According to Alex Towbin, M.D., Neil D. Johnson Chair of Radiology Informatics, Cincinnati Children's Hospital Medical Center, “Cincinnati Children’s is in the very early stages of AI. We have one clinical application implemented, and a number of internally developed applications in development. We have also purchased one externally developed stroke application, but have not implemented it yet.” The hospital has also acquired a number of applications whose vendors say they employ AI, but the staff are not sure that they really do. Towbin's focus is on automated workflow, which may not directly be AI, but is part of it.

Another example of governance is Brigham and Women’s Hospital, in conjunction with Massachusetts General Hospital. Kathy Andriole, Ph.D., director of research at the MGH and BWH Center for Clinical Data Science, states, “There are initiatives in many areas both imaging and non-imaging, including radiology, cardiology, the ICU, pathology, COVID, workflow optimization, and light images.” According to Andriole, “We have a committee across Mass General Brigham that oversees and approves clinical implementation of AI algorithms. The committee decides if and when an AI algorithm is implemented and looks at how to assess different applications.” Andriole indicated that “diagnostic applications get the publicity, but there may be more potential in non-diagnostic algorithms such as workflow.”

The University of Michigan balances between a governing committee and individual department efforts, according to Karandeep Singh, M.D., assistant professor of Learning Health Sciences, Internal Medicine, Urology, and Information at the University of Michigan. Singh chairs the Clinical Intelligence Committee that oversees operational AI activities at Michigan Medicine. While the University as a whole has efforts focused on AI/ML research (e.g., the Michigan Integrated Center for Health Analytics and Medical Prediction [MiCHAMP]) and resources for translation (Precision Health Implementation Workgroup), the Clinical Intelligence Committee's primary focus is on clinical operations. Singh says “there is a mechanism to initiate requests to the committee where a given clinical workflow could benefit from the use of an AI/ML model. In turn, the committee helps clinical stakeholders review and endorse models based on products that are available from vendors or models developed by researchers. In general, a researcher cannot directly bring a model to the attention of the committee but needs to have a clinical partner.” According to Singh, “If an AI/ML model affects a broad set of clinicians or other stakeholders, it requires review by the committee.”

According to Gary J. Wendt, M.D., professor of radiology and vice chair of informatics, University of Wisconsin Madison (retired), “there is a long-standing governance body in place that also addresses AI, including an approval process, but it doesn’t appear there is any coordination with non-imaging applications.”

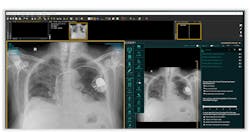

Imaging AI applications currently do not diagnose diseases; they are tools for detecting abnormalities in imaging studies or for quantifying physical parameters, such as blood flow rates or volumetric information, from imaging data sets. These results are then presented to the diagnostician, e.g., as a pop-up overlay screen on a PACS display. The radiologist can then consider whether these data are meaningful for augmenting the clinical diagnosis.

Other AI system configurations can use the AI-detected abnormalities or quantified data to accelerate the triage workflow by presenting AI-prioritized results to the radiologist. In the future there will likely be more and varied use cases.
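
As a rough illustration of AI-prioritized triage, the sketch below reorders a reading worklist so that studies flagged by an algorithm rise to the top while arrival order is otherwise preserved. The `Study` fields and the single true/false flag are assumptions for the example; real worklist systems use richer priority rules.

```python
from dataclasses import dataclass

@dataclass
class Study:
    accession: str       # hypothetical study identifier
    arrival_order: int   # lower means the study arrived earlier
    ai_flagged: bool     # True if an AI algorithm flagged a suspected abnormality

def prioritize(worklist):
    """Flagged studies first; within each group, keep arrival order."""
    # False sorts before True, so `not ai_flagged` puts flagged studies first.
    return sorted(worklist, key=lambda s: (not s.ai_flagged, s.arrival_order))

worklist = [
    Study("A1", 1, False),
    Study("A2", 2, True),
    Study("A3", 3, False),
    Study("A4", 4, True),
]
ordered = [s.accession for s in prioritize(worklist)]
```

With this input, the two flagged studies (A2 and A4) move ahead of the unflagged ones, and each group stays in arrival order.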

What is Needed for AI Governance?

Given the current state of AI, it is not too soon to consider governance within your institution. The first point to consider is the differentiation between imaging AI and non-imaging AI applications. Is there sufficient overlap between these areas to warrant a common governance?

Using radiology services as an example, AI imaging applications such as a stroke detection algorithm able to differentiate between an intracranial hemorrhage (ICH) and a large vessel occlusion (LVO) usually receive the greatest amount of publicity. However, workflow orchestration applications that automatically guide the caregiver through clinical data such as medical history, lab results, etc. and then intelligently prioritize the worklist study selection may be just as valuable.

Wendt stated, “Knowing the results of an AI analysis on an image five minutes before it is read may not be as valuable as having relevant information for that exam available at the time the study is interpreted.” Furthermore, Wendt and Towbin both suggest that AI image analysis may be more beneficial outside imaging services in improving the time to treat a lesion that has been analyzed by an AI algorithm.

Contrast these examples with an AI algorithm that evaluates the current patient schedule for a department, where the algorithm predicts potential no-shows and improves the actual percentage of patients that show up for an exam. Such a non-imaging algorithm may have a more beneficial economic impact on the department than an imaging application.

Regardless of scope, there is value in some form of governance and oversight to guide AI deployment. The University of Michigan, the University of California at San Francisco, and Brigham and Women’s/Massachusetts General all appear to have working committees that play a role in the oversight of AI applications. In all these cases, the committees were not necessarily established specifically for AI. Therefore, it may be easier to utilize existing infrastructure to provide governance than to start from scratch.

A governance body should be multi-disciplinary to ensure all relevant services are addressed when considering the merits of an AI application. This is particularly important when considering AI applications that cut across multiple services and clinicians, such as a pre-diabetic algorithm that may impact general practitioners, endocrinologists, ophthalmologists, and potentially others.

In the case of the University of Michigan, the feeling is that a service line such as radiology may be sufficiently self-contained with respect to some AI algorithms that it can manage its own AI governance. For example, an algorithm that analyzes images for a specific cancer, or a workflow prioritization algorithm, may only affect the radiologist, and therefore may have little impact outside radiology.
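
The division of labor between an enterprise committee and a self-managing service line can be sketched as a simple routing rule. This is a loose illustration inspired by the Michigan description, not the institution's actual process: the `ModelRequest` fields, the return labels, and the assumption that "broad" means more than one affected service are all hypothetical.

```python
from dataclasses import dataclass
from typing import FrozenSet

@dataclass(frozen=True)
class ModelRequest:
    name: str
    source: str                       # "vendor" or "researcher"
    affected_services: FrozenSet[str]
    has_clinical_partner: bool

def route(request):
    """Decide which body reviews a proposed AI/ML model (illustrative rule only)."""
    # Researchers bring models forward through a clinical partner.
    if request.source == "researcher" and not request.has_clinical_partner:
        return "needs clinical partner"
    # Assumption: a model touching more than one service counts as broad impact.
    if len(request.affected_services) > 1:
        return "enterprise committee review"
    # Self-contained models can be governed within the service line.
    return "service-line review"

lesion = ModelRequest("lesion detection", "vendor", frozenset({"radiology"}), True)
prediabetes = ModelRequest(
    "pre-diabetes risk", "vendor",
    frozenset({"primary care", "endocrinology", "ophthalmology"}), True,
)
unpartnered = ModelRequest("research model", "researcher", frozenset({"radiology"}), False)

decisions = [route(lesion), route(prediabetes), route(unpartnered)]
```

Writing the rule down, even this crudely, forces an institution to state what counts as broad impact and who owns the decision when a model stays within one service.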

The concept of an AI orchestration platform has been expressed by multiple AI vendors as a means for managing AI applications. These platforms are similar in concept to mobile phone vendor “app stores” in that they can offer multiple applications in one place, usually for a subscription fee. The intent of these platforms is to enable a vendor to approve a number of different applications, whether they be internally developed or from a third-party source, thereby making it easier for an institution to use. While in theory these platforms can simplify the accessibility and use of AI applications, not every vendor’s platform may have all the applications an institution is interested in, likely resulting in the need for multiple platforms.

According to Mongan, AI implementers should look to the DICOM (Digital Imaging and Communications in Medicine) standard as a model of successful interface standardization to follow when deploying AI. The DICOM standard has grown to be a successful means of digital image sharing by establishing a standardized format for a variety of images. Creating a standards group and defining a standard means for conveying an AI algorithm would be a tremendous boon to the acceptance of AI applications.

Implementing AI can be enhanced by following the experiences of others, including:

- Identify AI application activity: There are likely areas that are already working on or using AI algorithms. Understanding where there is already activity, and whether it benefits more than the service responsible, can help determine the best place for governance.

- Define an AI implementation strategy: A well-defined strategy is fundamental to AI governance. It should define who holds governance authority for the enterprise and for individual services, as well as the governance processes themselves.

- Determine the highest priority AI areas: Some AI applications will have localized value, whereas others may have enterprise-wide value. For example, an algorithm that assists in the identification of small lesions may have value within radiology, whereas one that predicts no-show rates may have more universal value. An institution will need to have clearly defined policies relative to the criteria for determining the value of an AI application. Is it monetary? Clinical? Other?

- Establish governing bodies to review and approve AI applications: The value of AI governance can be enhanced by establishing a process for the review and approval of AI applications. This may assist in avoiding redundancy in similar algorithms, as well as provide better interoperability across service lines.
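
One practical aid to the review step above is a registry of deployed or proposed AI applications that can be checked for overlapping functionality. The sketch below groups entries by use case and reports any use case served by more than one application. The registry layout and the application names are hypothetical placeholders.

```python
from collections import defaultdict

def find_overlaps(registry):
    """Return {use_case: [application names]} for use cases with more than one app."""
    by_use_case = defaultdict(list)
    for app in registry:
        by_use_case[app["use_case"]].append(app["name"])
    return {
        use_case: sorted(names)
        for use_case, names in by_use_case.items()
        if len(names) > 1
    }

# Hypothetical entries; a real registry would also track vendor, version, and owner.
registry = [
    {"name": "StrokeApp A", "use_case": "stroke detection", "service": "radiology"},
    {"name": "StrokeApp B", "use_case": "stroke detection", "service": "neurology"},
    {"name": "NoShow Predictor", "use_case": "no-show prediction", "service": "scheduling"},
]
overlaps = find_overlaps(registry)
```

In this example the two stroke detection applications acquired by different services surface as a candidate for consolidation, while the single no-show predictor does not.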

Summary

According to Towbin, “We already have algorithms today, but it is probably two or three decades away from large segments of our work being automated with hundreds or thousands of algorithms firing at once.” Towbin also believes “the real value of AI is when it’s doing things I can’t do.” He further believes that “the first 100 years in radiology has been qualitative medicine, while the next 100 years will be quantitative.”

Wendt is skeptical of AI cost justification: “You need to get the CFO on board, as anything that will show a readmissions reduction will be favorable.” Wendt also believes that an AI platform has the potential to optimize interoperability and minimize training. As for where AI algorithms will reside, Wendt is concerned that EHR vendors are reluctant to get involved as it may involve 510(k) regulatory requirements.

A central theme from several contributors is the value of standardization, which both Singh and Mongan stress. Mongan suggests looking to the DICOM (Digital Imaging and Communications in Medicine) standard, as “it was the biggest success story in imaging,” while Singh believes that physician organizations (e.g., American Society of Nephrology or American College of Radiology) would be effective groups for AI guidance, but models and technologies affecting different types of stakeholders need deep collaborative work for algorithms to be both accepted and helpful.

Many AI imaging algorithms are considered “Software as a Medical Device,” or SaMD, by the FDA. As such, only FDA-approved AI applications should be used by diagnosticians.

There are multiple algorithms approved by the FDA, often for the same application. An example would be stroke detection. Having a multitude of algorithms with similar functionality proliferating through a multi-hospital integrated delivery network (IDN) would significantly increase operational cost without a guaranteed improvement in patient care quality. This is contrary to a strategy for achieving the Triple Aim, a framework for simultaneously improving the patient experience, improving the health of populations, and reducing healthcare cost [Overview | IHI - Institute for Healthcare Improvement, Triple Aim for Populations].

An imaging governance committee (IGC) for vetting and standardizing all AI applications makes sense. The IGC could be dedicated to specific service lines of an IDN, such as an imaging service. The IGC should consist of different IDN stakeholders, such as medical, legal, regulatory, IT, clinical engineering and others. The IGC could also manage domains besides AI, for example the standardization of other assets, such as PACS, in the imaging service line across the IDN. Finally, the IGC should include representation from the IDN’s C-level governance.

As the authors have experienced in many IDNs, lack of coordination and lack of standardization are often the cause of breakdowns within service lines and are at the origin of unnecessary costs and quality issues with care delivery. They also cause communication issues between different service lines, leading to even more care delivery quality issues. In contrast, standardization leads to quality improvements and cost savings. These ultimately result in better patient satisfaction, and together they enable achieving the Triple Aim. IDNs need to standardize processes and technologies. In order to achieve the Triple Aim in AI deployments, they also need to implement IDN-wide and service line-specific AI governance.

Joseph L. Marion is Principal for Healthcare Integration Strategies, LLC and Henri Primo is Principal for Primo Medical Imaging Informatics.