An open-source platform called the Medical Open Network for Artificial Intelligence (MONAI) is gaining momentum in its efforts to help address the challenges of integrating deep learning into healthcare.

Duke AI Health in North Carolina recently hosted a presentation about MONAI by Stephen Aylward, Ph.D., chair of the MONAI advisory board, and the senior director of strategic initiatives for Kitware Inc., a company involved in the research and development of open-source software in the fields of computer vision, medical imaging, visualization, 3D data publishing, and technical software development.

“We've got 600,000 downloads so far, and that number is rapidly increasing,” said Aylward. “We actually just had the 1.0 release in September of this year. The first release was in April of 2020, so it's amazing how fast things are going. We have 150 individual contributors from around the world.”

MONAI is software at its heart, Aylward explained, but it also addresses data and community building. “Its goal is to accelerate the pace of research and development by providing a common software foundation and a vibrant community for medical image deep learning,” he said. “Again, it is not just software, but it really is about creating that community, so that software becomes community-supported. When a new publication comes out, written by an expert, that expert uses MONAI to implement that method, and then that method becomes available within MONAI.”

MONAI began as a project between AI computing company Nvidia and King's College London, but now has a large community of supporters. Among MONAI’s contributors are Vanderbilt University, Guy’s and St. Thomas’ NHS Foundation Trust, King’s College London, Stanford University, and Mayo Clinic.

MONAI is based on PyTorch and builds on existing standards such as DICOM and FHIR, Aylward said. “It's easy to learn and very well documented, but it's really optimized for dealing with medical image data and deep learning within that field. And it prioritizes reproducibility.”

He talked about MONAI being made up of several components, which are summarized on the MONAI website:

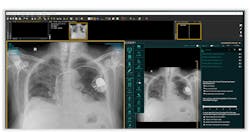

• MONAI Label is an intelligent image labeling and learning tool that uses AI assistance to reduce the time and effort of annotating new datasets. By utilizing user interactions, MONAI Label trains an AI model for a specific task and continuously learns and updates that model as it receives additional annotated images.

• MONAI Core is the flagship library of Project MONAI and provides domain-specific capabilities for training AI models for healthcare imaging. These capabilities include medical-specific image transforms, state-of-the-art transformer-based 3D segmentation algorithms like UNETR, and an AutoML framework named DiNTS.

• MONAI Deploy aims to become the de facto standard for developing, packaging, testing, deploying, and running medical AI applications in clinical production. MONAI Deploy creates a set of intermediate steps where researchers and physicians can build confidence in the techniques and approaches used with AI, allowing for an iterative workflow.

Other new components include a MONAI Model Zoo and Federated Learning. MONAI Model Zoo hosts a collection of medical imaging models in the MONAI Bundle format. A bundle includes the critical information necessary during a model development life cycle and allows users and programs to understand the purpose and usage of the models.

As the MONAI website notes, federated learning is emerging as a promising approach for training AI models without requiring sites to share data. That matters in medical use cases, where privacy and regulatory restrictions limit data sharing.

“Let’s say that Duke Radiology and UNC Radiology both have wonderful CT scans of the pancreas,” Aylward explained. “They want to go ahead and train a neural network that's better than any other neural network. Duke says, OK, UNC give us all your data, and UNC says no, you give us all your data. Sharing your data is always a challenge, but with federated learning, you can train a single model on datasets at different locations without ever sharing those datasets, and the model constantly improves.”
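The idea Aylward describes can be illustrated with a toy sketch of federated averaging, one common federated learning scheme. The article does not say which algorithm MONAI's federated learning support uses, so everything here is an assumption made for illustration: the site names, the made-up data, and the one-parameter model `y = w * x` standing in for a neural network. The point is only that each site trains on its own data and sends back a weight, never the data itself.

```python
# Toy federated averaging sketch in plain Python (no MONAI or PyTorch calls).
# Assumptions: hypothetical sites, made-up data, and a one-parameter
# linear model y = w * x standing in for a neural network.

def local_update(w, data, lr=0.01, epochs=5):
    """Run a few gradient-descent steps on one site's private data."""
    for _ in range(epochs):
        # Gradient of the mean squared error for the prediction w * x
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w

def federated_round(global_w, sites):
    """One round: every site trains locally and returns only its weight."""
    total = sum(len(data) for data in sites.values())
    new_w = 0.0
    for data in sites.values():
        site_w = local_update(global_w, data)
        # Weight each site's contribution by its number of samples
        new_w += site_w * len(data) / total
    return new_w

def mse(w, data):
    """Mean squared error of the model w * x on a dataset."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)

# Each list stays at its own site; the coordinator never reads it directly.
sites = {
    "site_a": [(1.0, 3.1), (2.0, 5.9), (3.0, 9.2)],
    "site_b": [(1.5, 4.4), (2.5, 7.6), (4.0, 12.1), (5.0, 14.8)],
}

w = 0.0
for _ in range(50):
    w = federated_round(w, sites)

# Pooled data is used here only to evaluate the toy example.
all_data = [pair for data in sites.values() for pair in data]
initial_error = mse(0.0, all_data)
final_error = mse(w, all_data)
```

In this sketch the shared weight settles near 3, the slope underlying both sites' data, even though the coordinating loop only ever exchanges the weight. Production systems add secure communication, aggregation safeguards, and real networks on top of this basic loop.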

Aylward also spoke about the value of the modular approach of MONAI. He described how a graduate student at MIT looked at five to seven different neural networks and wanted to see what the performance of these neural networks was going to be for doing a particular segmentation problem.

The student then came up with a new approach that merged the results of those five different networks together to produce a result that was better than any one of those individual networks. “The neat part about this being open source is the fact that they did this on some publicly available data,” Aylward said. “If that student had tried to implement those five different techniques on their own, let alone combine them into something new, that would have taken years, but this was a publication that was completed in a matter of months by the student, because they were able to take these modules, combine them together and compare them with one another, and use metrics that existed within MONAI to make a paper that would not have been feasible prior to MONAI. So it's really outstanding how it's supporting open science in that regard.”
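The article does not describe how the student merged the networks' results, so the following is only a sketch of one simple way to combine several segmentation outputs and score them with a shared metric. It averages per-voxel foreground probabilities and compares each model and the ensemble using the Dice coefficient. It is written in plain Python rather than with MONAI calls, and the three "models" and their numbers are invented for illustration.

```python
# Toy ensemble sketch: average per-voxel probabilities, then score with Dice.
# Assumptions: the model outputs and ground truth below are made up, and
# mean-probability averaging is just one possible way to merge models.

def average_probabilities(prob_maps):
    """Voxel-wise mean of foreground probabilities from several models."""
    n = len(prob_maps)
    return [sum(values) / n for values in zip(*prob_maps)]

def threshold(probs, cutoff=0.5):
    """Turn probabilities into a binary mask."""
    return [1 if p >= cutoff else 0 for p in probs]

def dice(pred, truth):
    """Dice coefficient: 2 * |A and B| / (|A| + |B|) for binary masks."""
    intersection = sum(p * t for p, t in zip(pred, truth))
    denominator = sum(pred) + sum(truth)
    return 1.0 if denominator == 0 else 2.0 * intersection / denominator

# A flattened six-voxel "image" with its ground-truth mask
truth = [0, 1, 1, 1, 0, 0]

# Hypothetical foreground probabilities from three different networks
model_outputs = [
    [0.2, 0.9, 0.8, 0.4, 0.1, 0.6],
    [0.1, 0.7, 0.4, 0.8, 0.3, 0.2],
    [0.6, 0.8, 0.9, 0.7, 0.2, 0.1],
]

individual_scores = [dice(threshold(p), truth) for p in model_outputs]
ensemble_mask = threshold(average_probabilities(model_outputs))
ensemble_score = dice(ensemble_mask, truth)
```

With these hand-picked numbers, each model makes a different mistake and the averaged prediction cancels them out, so the ensemble scores higher than any single model. Real results depend on the data and models, which is why a shared set of modules and metrics makes such comparisons quicker to run.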

A MONAI Deploy working group is seeking to define how to close the existing gap between research and development and clinical production environments by bringing AI models into medical applications and clinical workflows, with the end goal of helping improve patient care. The focus will include defining the open high-level functional architecture and determining which components and standard APIs are required.

Following a phased approach, the MONAI Deploy working group will concentrate first on what the end-to-end experience should look like. The challenges it plans to address include:

- Packaging trained AI models into medical executable applications

- Lightweight inference for local validation

- Model orchestration, deployment, serving, and inference across multiple compute nodes

- Consuming multi-modal data pipelines, starting with DICOM-based medical imaging and followed by patient data from EHRs